Formloupe: Explorations into Tangible AR

Formloupe is an augmented reality (AR) experience, situated in furniture retail stores, allowing users to customise and design furniture and rooms. It is the culmination of a master's thesis research project by Ajla Ćano and Daniel Roeven.

AR is a relatively new medium, and new interaction patterns are still being discovered and created. Questions and topics central to this research project are:

- What can we learn from the wealth of existing research on interaction styles when creating new interaction patterns and models that suit AR?

- What interaction opportunities can this new medium offer that touchscreen interaction can't?

- How can we use this new medium to advance existing interaction styles and overcome their limitations?

By creating various augmented reality designs, we explore these questions and seek answers to them. Formloupe is the final artefact of this research, and it demonstrates our suggestions for interaction patterns in the augmented reality space.

Let's begin by looking at the challenges AR needs to overcome.

Interaction Challenges of AR

AR relies on new display and visualisation technologies. Portable HUD headsets and glasses render stationary keyboards unusable, and 3D models overlaid on the real world make two-dimensional mouse or touchscreen input impractical. Mobile AR makes it hard to perceive and interact with all three dimensions, applications often regress to suboptimal touchscreen interaction patterns, and the AR scene and the output screen compete for the user's attention [1].

Interaction techniques from WIMP [2] and touchscreens are poorly suited to the AR space, and there is still work to be done in discovering and creating suitable interactions and good user experiences for AR.

Formloupe's Application Domain: AR in Retail

Mobile AR, that is, augmented reality on a smartphone, is currently the most widely available form of AR. But we don't believe it is the future of the medium. It is awkward to view the world through your phone camera and tiring to hold the phone at the angle AR requires, and the small screen severely limits our field of view and interaction possibilities.

The world is too rich and beautiful and full of possibilities and wonder to confine ourselves to viewing it through the tiny slabs of glass that are smartphones.

Other AR technologies, like HUD headsets or glasses, are currently prohibitively expensive for at-home, consumer use cases [3].

We want our research to have industrial relevance, but also to push AR interaction design research forward before prices come down and the hardware becomes affordable for consumers. Therefore, we look at domains where one system can be used and shared by many users over time. Consumer-facing businesses open up avenues for consumer AR research and allow for advanced applications that might currently be too expensive for individual consumers, but not for businesses looking to differentiate themselves from competitors through enticing user experiences. This project identified brick-and-mortar stores as a suitable initial testing ground for new AR experiences. Could experiences made possible through AR offer novel solutions to common shopping issues, like finding clothes that fit well or previewing items that are not on display?

Interaction Styles and AR

If interaction patterns in AR are still being discovered, and do not carry the baggage of several decades' worth of established mental models, let us consider what we desire of this new interactive medium.

We envision a medium that speaks to us as humans, that is malleable and modeless [4]. That provides more interaction possibilities than clicking or tapping. That is enticing, exhilarating, fun, and that allows us to be messy and imprecise. You can learn it through exploring, and you can think by simply doing and trying.

We feel strongly about this vision, but we are not the first to think these thoughts. They come from a line of thinking by academics and practitioners across the globe. In particular, we have been strongly influenced by:

Embodied Interaction

Paul Dourish introduced embodied interaction to the design community as “interaction with computer systems that occupy our world, a world of physical and social reality, and that exploit this fact in how they interact with us” [5]. Embodied interaction thus “exploits our familiarity with the real world, including experiences with physical artefacts and social conversations” [6]. This strength goes beyond making systems more accessible by drawing on pre-existing knowledge of how the world works: it also gives them interactive qualities unattainable by two-dimensional GUIs.

Tangible Interaction

Tangible interaction inherently provides embodied interaction, since its aim is to shift computing into the physical world. Dourish builds upon the field of tangible computing, and the concept of embodiment can already be found in the foundational paper on tangible interaction, which aims to “embrace the richness of human senses and skills people have developed through a lifetime of interaction with the real world” [7]. Tangible interaction likewise has qualities that are unattainable by 2D GUIs: it opens up possibilities for collaboration, thinking-by-doing, and performing several actions at once [8].

Fun and Beauty in Interaction

As early as 2000, product design researchers at Eindhoven University of Technology aimed to help the augmented reality community avoid the pitfalls that the product design community was then overcoming. They posit that electronic products and their UIs place a large burden on the user’s cognitive skills (for example, interaction through rows of identical buttons differentiated only by icons or labels), rather than adequately addressing the user’s perceptual-motor skills. They present ten points of advice [9], which the augmented reality community should heed if it wishes to create products that “restore the balance in addressing all of man’s skills”.

- Don’t think products, think experiences.

- Don’t think beauty in appearance, think beauty in interaction.

- Don’t think ease of use, think enjoyment of the experience.

- Don’t think buttons, think rich actions.

- Don’t think labels, think expressiveness and identity.

- Metaphor sucks.

- Don’t hide, don’t represent. Show.

- Don’t think affordances, think irresistables.

- Hit me, touch me, and I know how you feel.

- Don’t think thinking, just do doing.

Twenty years of AR development later, we still find their advice highly applicable.

Summing up

These approaches to interaction describe ways to create good user experiences by exploiting the richness of human senses and skills people have developed through living in the physical world. Relating to these senses and skills makes embodied systems easy to learn and use. They are also a natural fit for AR, sharing its goal of interacting with the digital world through the physical one. Finally, these interaction styles possess unique interactive qualities that are not attainable in 2D UIs. Interactive systems that surprisingly or delightfully tie together the physical and digital worlds offer the possibility to create beautiful and unique user experiences.

Challenges of Embodied and Tangible Interaction

Scalability

One traditional challenge of embodied or tangible interfaces is that of scalability. After all, embodied or tangible interfaces need tangible components, of which there can only be so many. This natural yet hard constraint limits the system's possibilities.

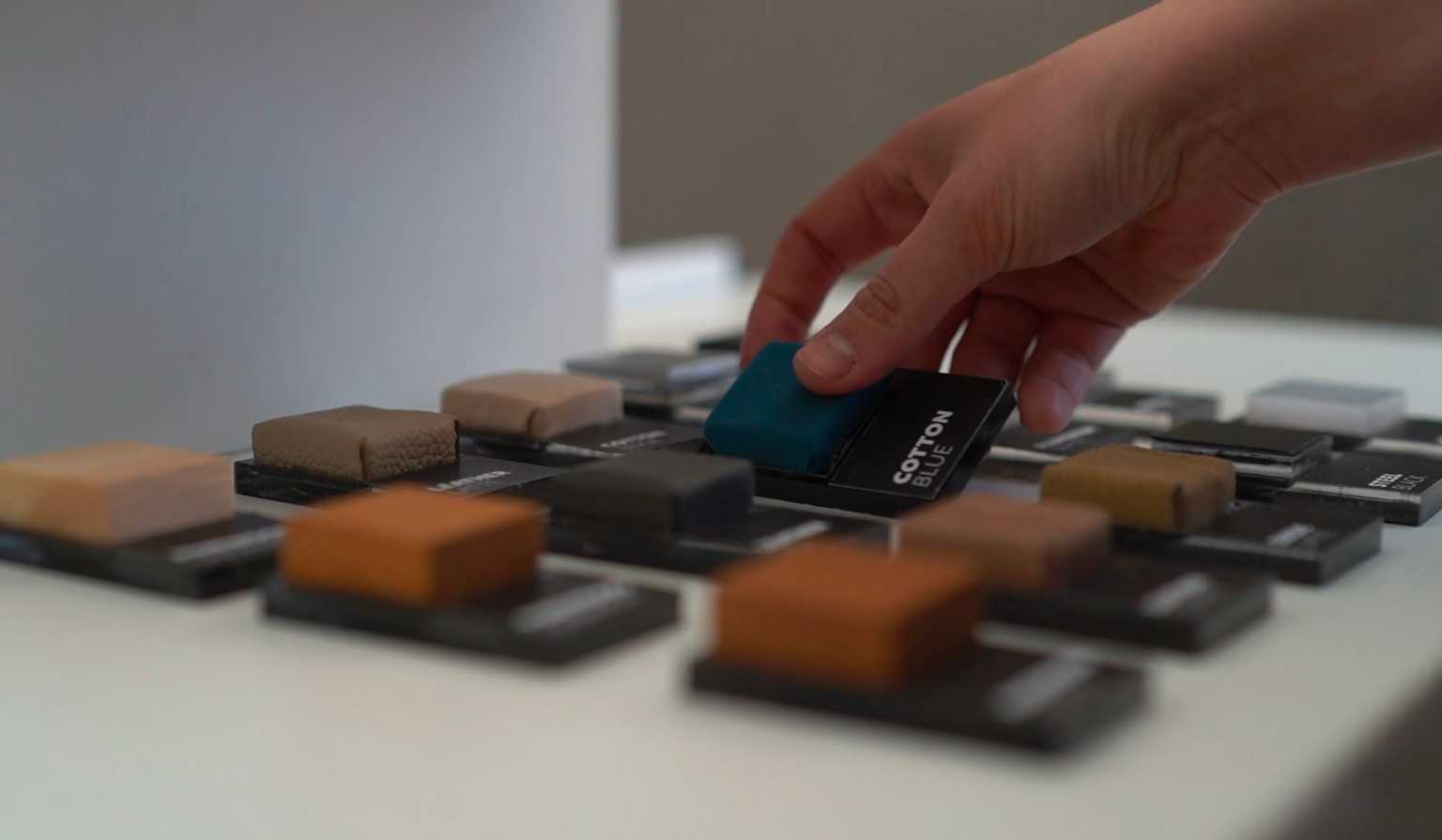

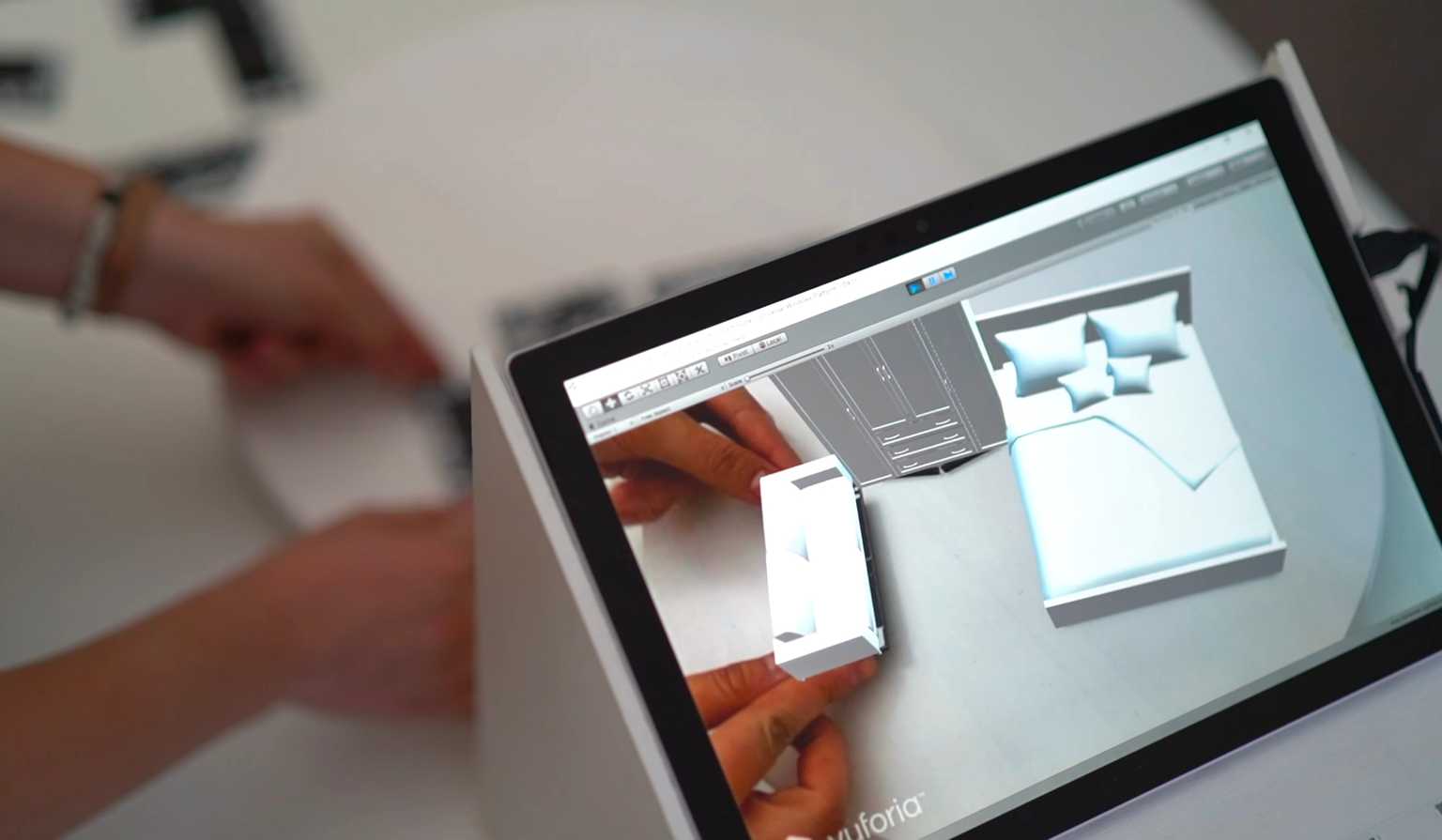

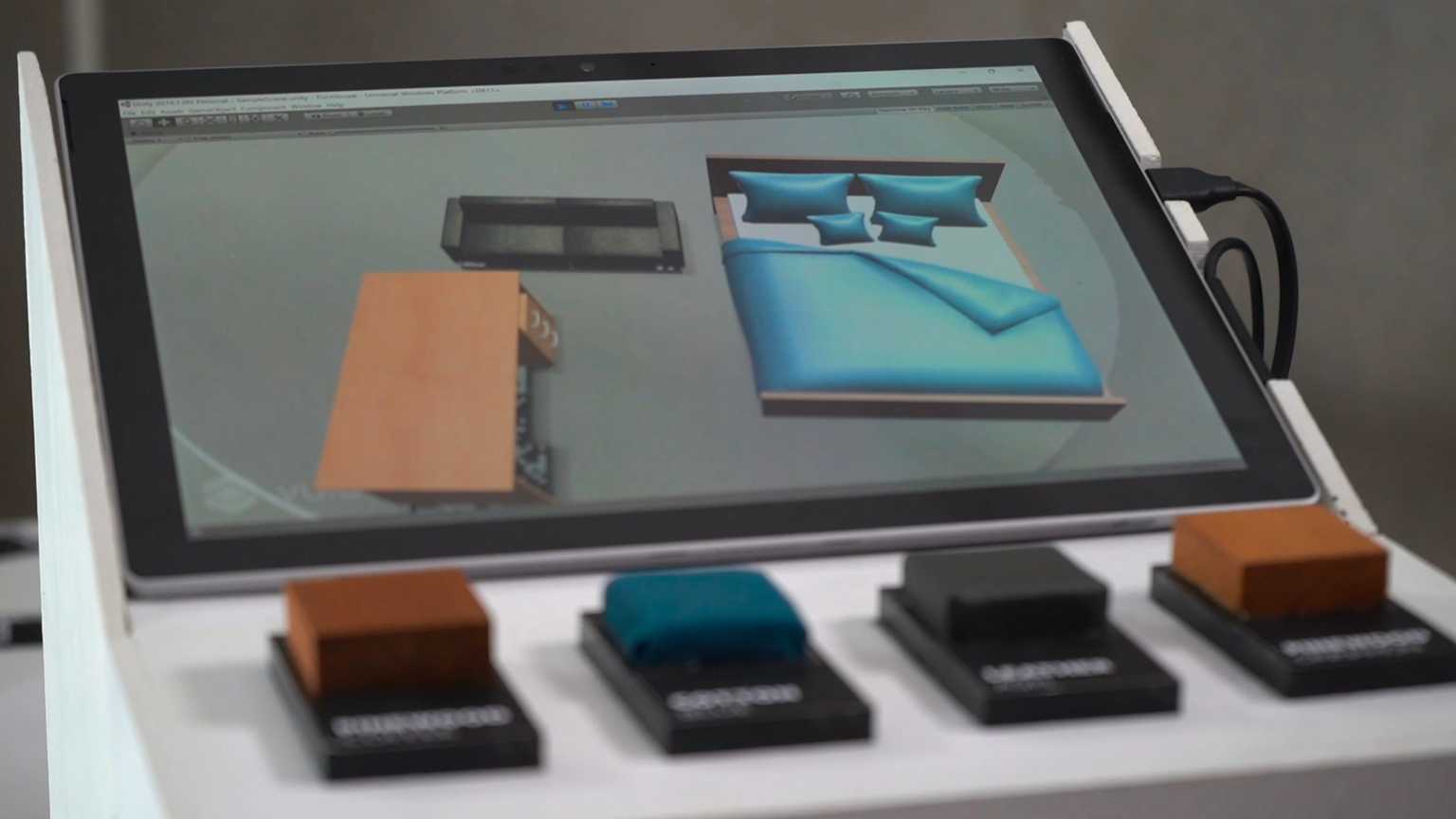

Formloupe hints at a way to overcome such scaling issues through AR. The countless combinations of furniture pieces, positions, models, and materials are easily represented in AR, which combines the various graspable inputs into a meaningful embodied user experience. AR's digital representations allow a small set of graspables to combinatorially explode the output space.

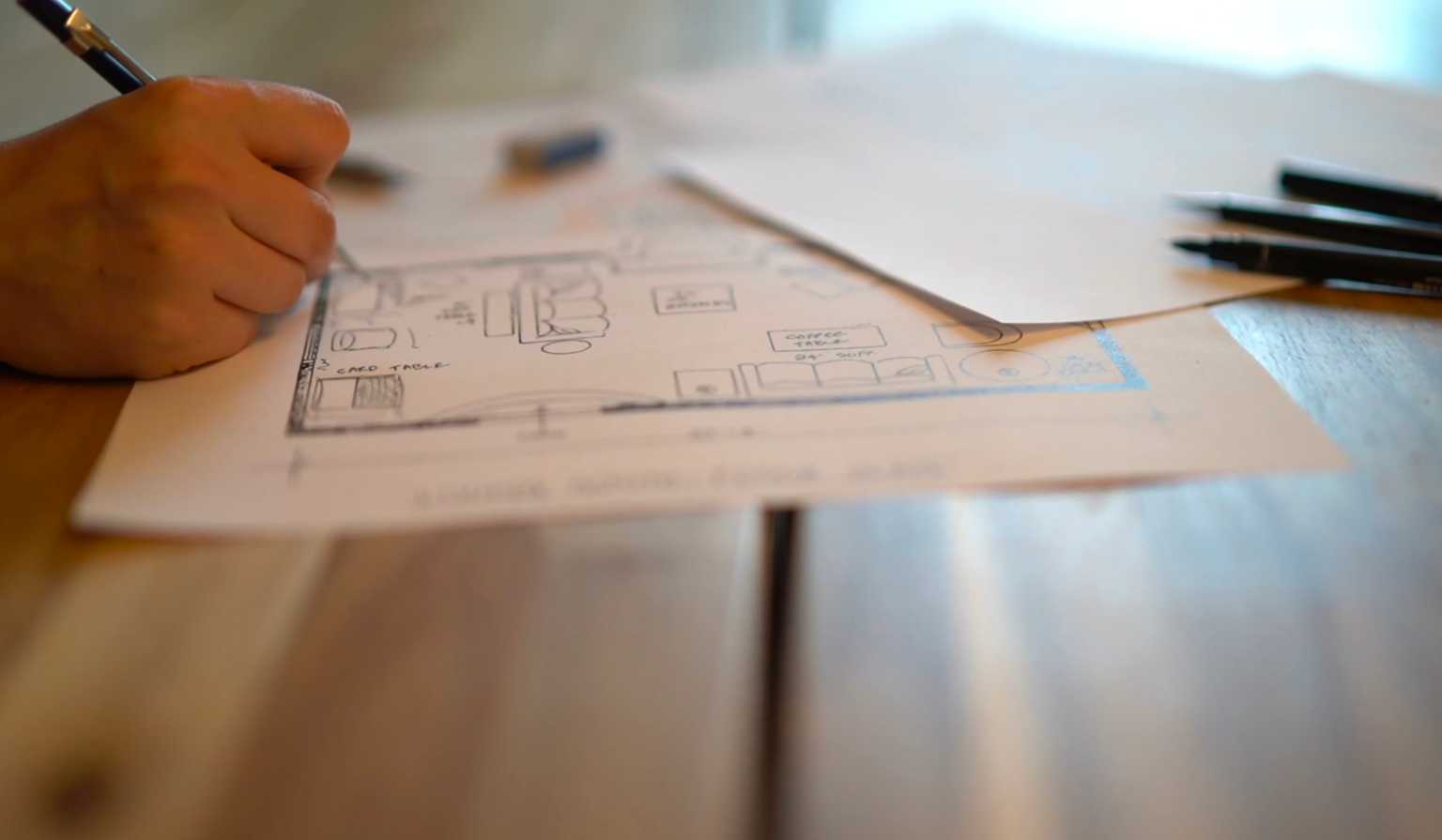
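To make the combinatorial growth described above concrete, here is a small Python sketch. The token names and counts are hypothetical, chosen only for illustration, and the count assumes that each placed token independently shows one of its models in one of its applicable materials while ignoring position (which would only enlarge the space further).

```python
from math import prod


def design_count(tokens: dict) -> int:
    """Count distinct AR renderings of a fixed set of placed tokens.

    `tokens` maps a (hypothetical) token name to a pair (models, materials):
    how many 3D models the token can stand for, and how many materials
    apply to it. Positions are ignored, so the real output space is larger.
    """
    return prod(models * materials for models, materials in tokens.values())


# Hypothetical catalogue numbers, for illustration only.
room = {
    "bed": (4, 6),
    "cabinet": (3, 6),
    "sofa": (5, 4),
}
print(design_count(room))  # 8640

# One more token multiplies the output space instead of adding to it.
room["chair"] = (2, 4)
print(design_count(room))  # 69120
```

Three tokens already yield thousands of renderings, and a fourth multiplies that number rather than adding to it; this multiplicative growth is the combinatorial explosion referred to above.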

Of course, combinatorial explosion through AR addresses only one dimension of the scalability problem of tangible and embodied interaction. It works within one application (room planning), but does not carry over to others. To take another step up the ladder of scale, we could do one of two things: make graspable input tokens even more general, so that they are useful in other applications (at the cost of making the system less iconic and placing more cognitive burden on the user), or make them cheaper and c. What if you could draw your floor plan and furniture with pen and paper, and then visualise it in AR?

Freedom of Interaction

Freedom of interaction is a notion described by the same group [10] that provided the ten guidelines for designing rich interactive AR products. The ideal is shared, if not explicitly then in spirit, by both tangible and embodied interaction. It is about:

- Interaction as an informal assemblage of steps rather than a rote procedure driven by the system.

- A myriad of ways to achieve a product's functionality.

- No single point of control or interaction: allow the user to act at multiple points at once.

- Easily reversible actions, so that the consequences of an action can be undone.

We would add one point to this list: making your product modeless. Do not constrain the user to a certain set of functionality, or require them to switch modes to access a different one.

Offering multiple ways to achieve a product's functionality is often constrained by things like screen space, input hardware, and software complexity. It is more complex to program software that allows actions in any order than to require a certain interaction sequence from the user.

Mouse cursors and the text insertion point are single points of interaction. Even multitouch interfaces seldom allow multiple points of interaction: gestures like pinch-to-zoom are functions that act on one target, while making other simultaneous actions impractical.

As for reversible actions, computers frequently use a last-in-first-out undo stack (Ctrl+Z) [11]. But this linear undo model doesn't allow undoing an earlier action without also undoing all the actions that followed it. Applications that do allow arbitrarily undoing historical actions, such as parametric modelling tools, are often complicated. In parametric modelling, historical edits to your model can be undone or changed, and the "future history" is recomputed automatically, updating your model as desired. However, this requires a precise way of working, and setting up your model in a specific way so that the system understands it: rather the opposite of "freedom of interaction".
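The limitation of a linear undo model can be sketched in a few lines of Python. The `LinearUndo` class and the action labels are hypothetical; the sketch only shows that, with a last-in-first-out stack, undoing an earlier action forces every later action to be undone as well.

```python
class LinearUndo:
    """A last-in-first-out undo history, as behind a typical Ctrl+Z."""

    def __init__(self):
        self._done = []  # most recent action at the end

    def do(self, action):
        self._done.append(action)

    def undo(self):
        """Undo the most recent action (the only cheap case)."""
        return self._done.pop()

    def undo_historical(self, action):
        """Undo an earlier action.

        With a LIFO stack the only way to reach `action` is to pop every
        action performed after it, so those are undone too. Returns the
        later actions that were lost along the way, newest first.
        """
        if action not in self._done:
            raise ValueError(f"{action!r} is not in the history")
        lost = []
        while (last := self._done.pop()) != action:
            lost.append(last)
        return lost

    @property
    def history(self):
        return list(self._done)


h = LinearUndo()
for a in ["place bed", "pick beech", "place cabinet", "pick walnut"]:
    h.do(a)

print(h.undo_historical("pick beech"))  # ['pick walnut', 'place cabinet']
print(h.history)                         # ['place bed']
```

Undoing the choice of beech also throws away the cabinet and the walnut, even though the user only wanted to revisit one decision.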

Freedom of interaction is an appealing idea, but not trivial to realise in digital systems. We believe that the combination of AR and embodied interaction can provide relief here.

Formloupe attains a high degree of freedom of interaction. There is no "first step": start by selecting materials, by browsing available furniture pieces, or by previewing different models in AR. After that, play with anything else as the next step: place more furniture, choose or swap materials, or browse different furniture models. There is no predetermined sequence of actions one must follow, which lets users create their own workflows. Collaborate, with one user placing furniture while another browses models and a third selects materials? Or first select materials together that go well with each other and fit your home, before planning your desired room and furniture? Apply and try all of the materials (quickly!) and discard the ones you don't like?

A simpler-to-implement but harder-to-use interaction model would require the user to first select a furniture piece, then a material, and then which furniture to apply it to (throwing errors when the user selects the "wrong" furniture, like leather on a cabinet!). Instead, Formloupe simply applies the material to all applicable furniture pieces, both those present in the scene and those added afterwards. Switching materials is then as simple as removing one material and adding another. No modes needed.

Sometimes, Formloupe cannot infer what you intend. For example, what if you want a cabinet in beech but a bed in walnut? Pick beech, and it will be applied to the cabinet and the bed. Now pick walnut, and Formloupe will let you choose which furniture pieces it should "override". Regret the override? Just remove walnut. Want everything in walnut instead? Remove the beech.
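The material behaviour described above can be expressed as a small resolution rule. This is a hypothetical Python sketch, not Formloupe's actual implementation: we assume that each material declares which furniture kinds it applies to, that the earliest-placed applicable material is the default, and that a user override only takes effect while the overriding material is still on the board. The names `Material` and `resolve_materials` are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Material:
    name: str
    applies_to: frozenset  # furniture kinds this material can be used on


def resolve_materials(pieces, board, overrides):
    """Decide which material each furniture piece is rendered in.

    pieces:    dict of piece id -> furniture kind, for pieces on the floor plan
    board:     list of Material tokens on the material board, oldest first
    overrides: dict of piece id -> material name picked by the user
    """
    result = {}
    for piece, kind in pieces.items():
        candidates = [m.name for m in board if kind in m.applies_to]
        chosen = overrides.get(piece)
        if chosen in candidates:
            result[piece] = chosen  # explicit override, still valid
        elif candidates:
            result[piece] = candidates[0]  # default: earliest applicable material
        else:
            result[piece] = None  # nothing applicable: no error, just no material
    return result


beech = Material("beech", frozenset({"cabinet", "bed"}))
walnut = Material("walnut", frozenset({"cabinet", "bed"}))
leather = Material("leather", frozenset({"sofa"}))
pieces = {"c1": "cabinet", "b1": "bed", "s1": "sofa"}
overrides = {"b1": "walnut"}  # the user asked walnut to override the bed

print(resolve_materials(pieces, [beech, leather], {}))
# {'c1': 'beech', 'b1': 'beech', 's1': 'leather'}
print(resolve_materials(pieces, [beech, leather, walnut], overrides))
# {'c1': 'beech', 'b1': 'walnut', 's1': 'leather'}
print(resolve_materials(pieces, [beech, leather], overrides))  # walnut removed
# {'c1': 'beech', 'b1': 'beech', 's1': 'leather'}
print(resolve_materials(pieces, [leather, walnut], overrides))  # beech removed
# {'c1': 'walnut', 'b1': 'walnut', 's1': 'leather'}
```

Leather never lands on the cabinet, and the stale override for the bed is simply ignored once walnut is removed, so the function never has to report an error. A piece added later picks up the current default material automatically.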

Never does the system enter a "blocking" state, where it needs you to resolve a conflict before continuing. Every interaction is always valid and meaningful. Even during overrides, when the system explicitly asks something of the user, Formloupe doesn't enter a "mode". Adding or removing furniture or materials still works; you simply have the additional option of providing more information.

So what are the ingredients of interaction that unlock this freedom of interaction? We identify the following reasons:

- Tangible AR allows you to offload the system state to the physical environment. The placement of furniture is not determined by coordinates in the software, but by where your pieces are on the table. Material selection is not determined by bits on a machine, but by placing a material on a meaningful spot. Storing information in the environment opens up simultaneous actions (multiplexed in space), while AR augments the environment with meaningful information (compositing furniture and material into a room design). This also means you can leverage physical constraints: furniture tokens collide but don't intersect, and the system has fewer "error" states to encounter.

- You can't hit pause on reality: tangible AR is always in actionable mode. Tangible tokens can always be moved; the system can't crash or throw an error at you for doing things "incorrectly". This places very different constraints on interaction designers. Actions you take must always be meaningful input. This prevents getting the user stuck in modes, and allows for actions in any order.

- Easy undo follows from a deterministic AR state, which in turn follows from meaningful, embodied interaction. Room planning with Formloupe is a meaningful activity even without an AR representation. Furniture placement and material selection are meaningful without a computer "acknowledging" you. This means that the AR representation is almost entirely determined by the semantics of your physical environment. This "deterministic" quality gives rise to easy undo: remove a furniture piece or material, and the system can always recover. A given physical configuration always yields the same AR representation. Historical order does not matter, and undoing any action, be it the first one, the last one, or any in between, is easy to achieve and understand.

- AR can resolve the tension between freedom and complexity. Freedom of interaction is easier to achieve in simpler products; as functional complexity grows, it becomes harder to maintain. The fewer possible outputs a product has, the easier it is to map multiple inputs, in varying sequences, to those few outputs. AR resolves this tension through combinatorial explosion: a few input tokens can be combined in myriad ways, generating a vast space of room designs.
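The claim that the AR representation depends only on the physical configuration, and not on its history, can be illustrated with a minimal Python sketch. Here the table is assumed to be a set of hypothetical (token, position) pairs, and `render` is a placeholder that merely lists them in a fixed order; a real renderer would map tokens to 3D models and materials.

```python
from itertools import permutations

# Hypothetical model: the table is the set of (token, position) pairs that are
# physically present. Material tokens sit on a labelled spot on the board.
placements = [("bed", "A1"), ("cabinet", "C2"), ("beech", "material board")]


def render(table):
    """Placeholder renderer: the scene depends only on what is on the table.

    Sorting stands in for any deterministic function of the table's contents.
    """
    return sorted(table)


def build(actions):
    """Replay a sequence of placements, starting from an empty table."""
    table = frozenset()
    for token in actions:
        table = table | {token}
    return table


# Every order of placing the same tokens yields the same scene.
scenes = {tuple(render(build(order))) for order in permutations(placements)}
print(len(scenes))  # 1

# Undo any action, not only the last one, by lifting that token off the table.
full = build(placements)
print(render(full - {("bed", "A1")}))
# [('beech', 'material board'), ('cabinet', 'C2')]
```

Because the scene is a function of the current table alone, removing the first token placed is exactly as easy as removing the last, and the result is the same as if that token had never been placed.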

Solving AR Interaction Challenges

The AR challenges mentioned earlier are “regression to familiar smartphone interactions”, “scene over screen”, and “three-dimensional objects in a two-dimensional space”.

The regression to familiar smartphone interactions was both observed and resolved in Formloupe evaluations. Users built a living room out of minifigures and previewed their design on the screen. Some of them tried to rotate the room by swiping on the tablet display, but realised immediately, often with humour, that rotating the room is done by physically rotating the floor plan. We surmise that this was resolved so quickly because the users had previously built their room physically on the floor plan, and had thus already experienced the embodied interaction, which led them to solve this problem in an embodied way too.

The scene over screen problem is solved similarly. Embodied interactions take place physically within the scene, which largely removes the need to interact with the screen at all. Interestingly, this tips the balance so far in favour of embodied interactions (in the scene) that touchscreen interactions sometimes go undiscovered (for example, switching between different furniture models by tapping).

The final challenge, that of three-dimensional objects in a two-dimensional space, can be alleviated by grounding the interactions in an embodied style that uses all three dimensions. As described above, users interact with three-dimensional minifigures before previewing them in a two-dimensional AR representation, and as such have no trouble understanding the three-dimensional nature of the scene.

Closing Thoughts

Through the development of Formloupe, we have uncovered and identified various benefits of combining AR with embodied and tangible interaction:

- AR can be used for creative production work, not just for content consumption or location-based overlays like navigation. While VR is more commonly associated with creative production work, tangible AR allows interactions to be grounded in the physical world, because it does not obscure our environment.

- Tangible AR provides rich and enticing interfaces that speak to our senses and our physical and motor skills, thereby identifying a way forward for embodied, humane interaction in a new digital medium.

- Tangible AR suggests a solution to the scalability problem of tangible interaction: graspables that create myriad possibilities through combinatorial explosion.

- Tangible AR removes some hurdles typically faced when creating interfaces with high freedom of interaction.

- Tangible AR provides a way to overcome well-known AR interaction problems, like regressing to smartphone interactions, and the attention problem between scene and screen.

Notes

1. Woo, F. H., & Cheng, J. (2018, March 8). Early Challenges in AR UX. Google Design. https://design.google/library/augmented-reality-ux-design/

2. Windows, Icons, Menus and Pointers: a term describing the predominant interaction style on desktop and laptop computers.

3. VR Intelligence & Superdata. (2018, December). XR Industry Survey 2018. VRX Conference & Expo.

4. A mode is a state of a user interface in which inputs are interpreted differently than they would be otherwise, requiring the user to switch back and forth between modes to unlock the full functionality.

5. Dourish, P. (2001). Where the action is: The foundations of embodied interaction. MIT Press.

6. LaViola Jr, J. J., Kruijff, E., McMahan, R. P., Bowman, D., & Poupyrev, I. P. (2017). 3D user interfaces: Theory and practice. Addison-Wesley Professional.

7. Ishii, H., & Ullmer, B. (1997, March). Tangible bits: Towards seamless interfaces between people, bits and atoms. In Proceedings of the ACM SIGCHI Conference on Human Factors in Computing Systems (pp. 234-241).

8. Shaer, O., & Hornecker, E. (2010). Tangible user interfaces: Past, present, and future directions. Now Publishers Inc.

9. Djajadiningrat, J. P., Overbeeke, C. J., & Wensveen, S. A. (2000, April). Augmenting fun and beauty: A pamphlet. In Proceedings of DARE 2000 on Designing Augmented Reality Environments (pp. 131-134).

10. Wensveen, S. A., Djajadiningrat, J. P., & Overbeeke, C. J. (2004, August). Interaction frogger: A design framework to couple action and function through feedback and feedforward. In Proceedings of the 5th Conference on Designing Interactive Systems: Processes, Practices, Methods, and Techniques (pp. 177-184).

11. A linear undo stack is a data structure that allows actions to be undone in reverse chronological order.