"Point" Smarthome Remote App

Smart home controls are clunky, so Bastian Andelefski developed an alternative. Using precise indoor positioning (via the ultra-wideband chips in modern phones), you point your phone at any connected light bulb or other smart home device, such as a thermostat, and the relevant controls pop up on the screen.
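To make the pointing idea concrete, here is a minimal sketch of how a target could be chosen once you know where the phone is and which way it is aimed. This is not how "Point" is actually implemented; the function name `pick_pointed_device`, the coordinate format, and the 15-degree tolerance are all my own assumptions for illustration. It simply picks the device whose direction from the phone deviates least from the pointing direction.

```python
import math


def pick_pointed_device(phone_pos, pointing_dir, devices, max_angle_deg=15.0):
    """Return the name of the device best aligned with the pointing direction.

    phone_pos and each device position are (x, y, z) tuples in metres;
    pointing_dir is any non-zero (x, y, z) direction vector.
    Returns None if no device lies within max_angle_deg (an assumed tolerance).
    """
    norm = math.sqrt(sum(c * c for c in pointing_dir))
    if norm == 0:
        return None
    unit_dir = [c / norm for c in pointing_dir]

    best_name, best_angle = None, max_angle_deg
    for name, pos in devices.items():
        # Vector from the phone to the device.
        offset = [p - q for p, q in zip(pos, phone_pos)]
        dist = math.sqrt(sum(c * c for c in offset))
        if dist == 0:
            continue  # Device and phone coincide; direction is undefined.

        # Angle between the pointing direction and the direction to the device.
        cos_angle = sum(u * o for u, o in zip(unit_dir, offset)) / dist
        cos_angle = max(-1.0, min(1.0, cos_angle))  # Guard against rounding.
        angle = math.degrees(math.acos(cos_angle))

        if angle <= best_angle:
            best_name, best_angle = name, angle
    return best_name
```

With a lamp at `(2, 0, 1)` and the phone at `(0, 0, 1)` aimed along the x-axis, the lamp would be selected; aim the phone the other way and nothing is. A real system would also have to deal with noisy position estimates and devices that line up behind one another, which this sketch ignores.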

It's an intuitive way of turning smart home lights on and off, and certainly a lot better than scrolling through a list of connected lights and reading the lamp names to find the one you want to toggle. I think it nicely exemplifies how ubiquitous computing (in this case, a mobile phone + ultra-wideband positioning beacons + smart light bulbs) can give rise to embodied interaction: the bodily movement required, both in pointing and in walking around, removes the cognitive burden of a UI with buttons and labels and replaces it with an interface that you operate with your motor skills. Said differently, it allows you to think with your body.

The word "intuitive" tends to be a bit overused in our field, but I think "Point" serves as a good example of a definition I like: interactions with natural coupling between action and reaction. Natural coupling is achieved when action and reaction are coupled in time, location, direction, dynamics, modality, and expression [1]. This definition helps us think about how to create intuitive interactions, and it includes complex interactions that are still performed intuitively (e.g. playing the guitar or violin).

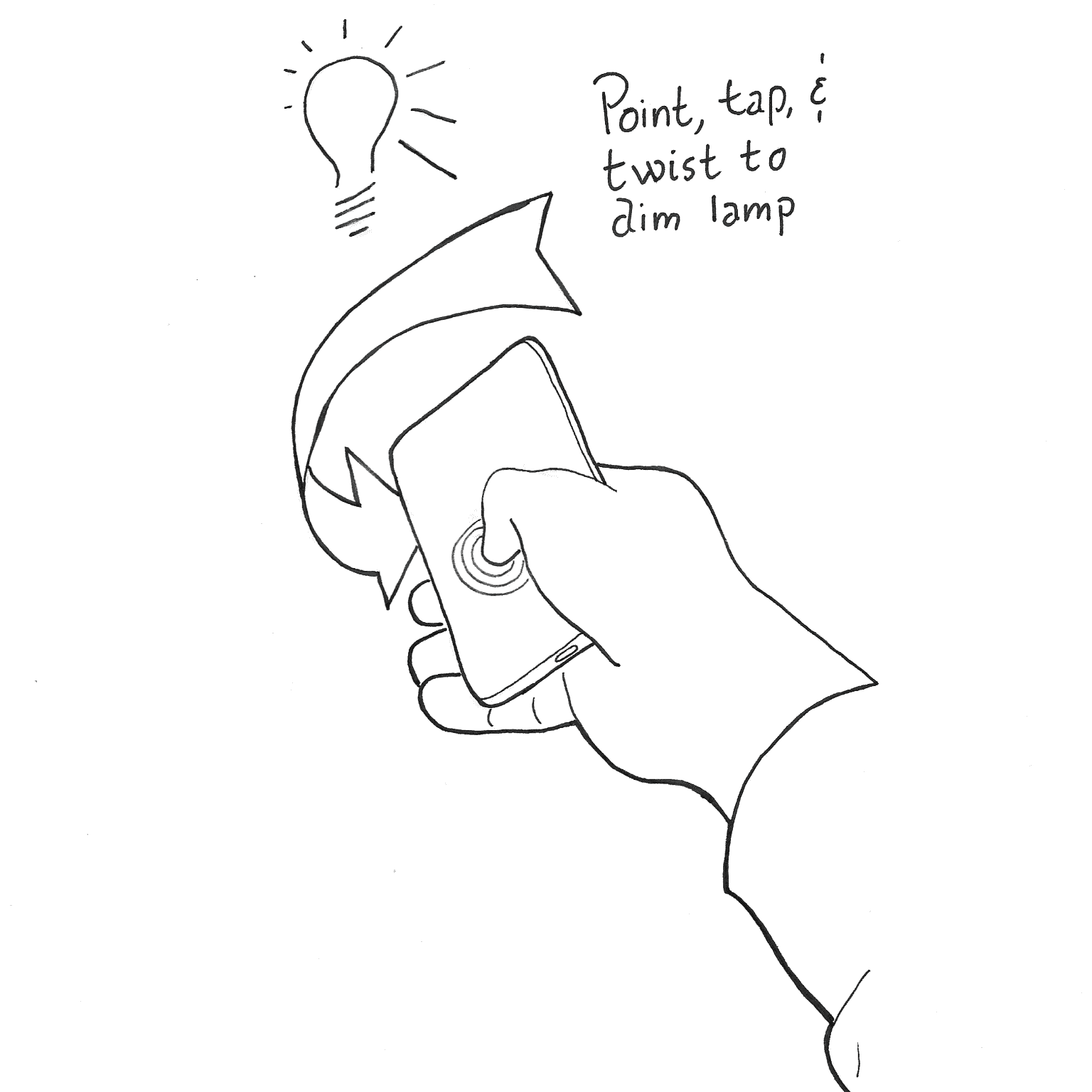

That said, intuitive interaction is not necessarily easy to use, just as, to take an extreme example, violins are not easy to use. It might feel great to point at lamps, but if you are lying in bed and need to turn off the light in the kitchen, having only this app available would render smart lamps useless. So it seems "intuitiveness" is not a panacea. Furthermore, needing to perform a precise drag gesture on the screen, without looking at the screen and without physical feedback, seems like it might become problematic. It is the same problem that people have with Tesla's touchscreen center stack or Apple's TV remote. Affordances in the shape of physical buttons allow you to find your way without looking. Perhaps "Point" could lean into more gestures, such as twisting?

1. Wensveen, S. A., Djajadiningrat, J. P., & Overbeeke, C. J. (2004, August). Interaction frogger: a design framework to couple action and function through feedback and feedforward. In Proceedings of the 5th conference on Designing interactive systems: processes, practices, methods, and techniques (pp. 177-184).